Summary: an open source mini utility for establishing a baseline measurement of Java application TCP latency, a short discussion on the value of baseline performance measurements, and a handful of measurements taken using the utility.

We all know and love ping, and in most environments it's available for us as a means of testing the basic network latency between machines. This is extremely useful, but ping measures ICMP round trips rather than TCP, it is not written in Java, and it's also not written with low latency in mind. This is important (or at least I think it is) when examining a Java application and trying to make an informed judgement on observed messaging latency in a given environment/setup.

I'll start with the code and bore you with the philosophy later:

The mechanics should be familiar to anyone who has used NIO before; the notable difference from common practice is using NIO non-blocking channels to perform essentially blocking network operations.
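For readers who want to see the shape of that technique, here is a minimal sketch of it. This is not the original utility: the class and method names (`SpinPingSketch`, `readFully`, `writeFully`, `run`) are made up for illustration, the 32 byte payload is an assumption, and for convenience it runs the echo server and the client in one JVM over loopback rather than as two separate pinned processes.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.net.StandardSocketOptions;
import java.nio.ByteBuffer;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;

public class SpinPingSketch {
    static final int MESSAGE_SIZE = 32; // assumed payload size in bytes

    // Busy-spin until the buffer is full. On a non-blocking channel read()
    // returns 0 when nothing is ready, so this loop burns CPU instead of parking.
    static boolean readFully(SocketChannel ch, ByteBuffer buf) throws IOException {
        buf.clear();
        while (buf.hasRemaining()) {
            if (ch.read(buf) == -1) {
                return false; // peer closed the connection
            }
        }
        buf.flip(); // ready to be written back out
        return true;
    }

    // Busy-spin until the whole buffer has been handed to the socket.
    static void writeFully(SocketChannel ch, ByteBuffer buf) throws IOException {
        while (buf.hasRemaining()) {
            ch.write(buf);
        }
    }

    // Server side: accept one client, echo every message, stop when it disconnects.
    static void echo(ServerSocketChannel server) {
        try (SocketChannel ch = server.accept()) {
            ch.setOption(StandardSocketOptions.TCP_NODELAY, true);
            ch.configureBlocking(false);
            ByteBuffer buf = ByteBuffer.allocateDirect(MESSAGE_SIZE);
            while (readFully(ch, buf)) {
                writeFully(ch, buf);
            }
        } catch (IOException e) {
            throw new IllegalStateException(e);
        }
    }

    // Client side: send a message, spin for the echo, record the round trip in nanoseconds.
    public static long[] run(int pings) {
        InetAddress loopback = InetAddress.getLoopbackAddress();
        try (ServerSocketChannel server = ServerSocketChannel.open()) {
            server.bind(new InetSocketAddress(loopback, 0)); // port 0: let the OS pick
            int port = ((InetSocketAddress) server.getLocalAddress()).getPort();
            Thread echoThread = new Thread(() -> echo(server));
            echoThread.start();
            long[] rtts = new long[pings];
            try (SocketChannel ch = SocketChannel.open(new InetSocketAddress(loopback, port))) {
                ch.setOption(StandardSocketOptions.TCP_NODELAY, true);
                ch.configureBlocking(false);
                ByteBuffer buf = ByteBuffer.allocateDirect(MESSAGE_SIZE);
                for (int i = 0; i < pings; i++) {
                    long start = System.nanoTime();
                    writeFully(ch, buf);
                    if (!readFully(ch, buf)) {
                        throw new IOException("server closed the connection");
                    }
                    rtts[i] = System.nanoTime() - start;
                }
            }
            echoThread.join(); // server exits once the client has disconnected
            return rtts;
        } catch (IOException | InterruptedException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        long[] rtts = run(100_000);
        long sum = 0;
        for (long rtt : rtts) {
            sum += rtt;
        }
        System.out.printf("mean RTT (ns): %.2f%n", (double) sum / rtts.length);
    }
}
```

Because both sides share a JVM and nothing is pinned or warmed up here, the numbers this prints are not comparable to the measurements further down; it only illustrates the spin-on-a-non-blocking-channel idea.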

The code was heavily 'inspired' by Peter Lawrey's socket performance analysis post and code samples (according to Mr. Lawrey's licence you may have to buy him a pint if you find it useful, I certainly owe him one). I tweaked the implementation to make the client spin as well as the server which improved the latency a bit further. I separated the client and server, added an Ant build to package them with some scripts and so on. Notes:

- The server has to be running before the client connects and will shut down when the client disconnects.

- Both server and client will eat up a CPU as they both spin in wait for data on the socket channel.

- To get the best results pin the process to a core (as per the scripts).

Baseline performance as a useful measure

When measuring performance we often compare the performance of one product to the next. This is especially true when comparing higher level abstraction products which are supposed to remove us from the pain of networking, IO or other such ordinary and 'technical' tasks. It is important however to remember that abstraction comes at a premium, and having a baseline measure for your use case can help determine the premium. To offer a lame metaphor, this is not unlike considering the bill of materials in the bottom line presented to you by your builder.

While this is not a full blown application, it illustrates the cost/latency inherent in doing TCP networking in Java. Any other cost involved in your application request/response latency needs justifying. It is reasonable to make all sorts of compromises when developing software, and indeed there are many corners to be cut in a 50 line sample that simply would not do in a full blown server application, but the 50 line sample tells us something about the inherent cost. Some of the overhead you may find acceptable for your use case, some of it you may not, but having a baseline informs you on the premium.

- On the same stack (hardware/JDK/OS) your application will be slower than your baseline measurement, unless it does nothing at all.

- If you are using any type of framework, compare the bare bones baseline with your framework baseline to find the basic overhead of the framework (you can use the above to compare with Netty/MINA for instance).

- Consider the hardware level functionality of your software to match with baseline performance figures (e.g. sending messages == socket IO, logging == disk IO etc.). If you think a logging framework has little overhead on top of the cost of serializing a byte buffer to disk, think again.
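The same baseline idea carries over to the disk IO case in the last point. A rough sketch, assuming a hypothetical `DiskWriteBaseline` class that is not part of the utility, of timing a bare byte buffer write through a `FileChannel` so there is a figure to hold a logging framework up against:

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

public class DiskWriteBaseline {
    // Times `writes` sequential writes of a `size` byte buffer to a temp file.
    // Returns the cost of each write in nanoseconds. Note that without a
    // force() call this measures handing data to the OS, not reaching the platter.
    public static long[] run(int writes, int size) {
        try {
            Path file = Files.createTempFile("baseline", ".log");
            try (FileChannel ch = FileChannel.open(file, StandardOpenOption.WRITE)) {
                ByteBuffer buf = ByteBuffer.allocateDirect(size);
                long[] nanos = new long[writes];
                for (int i = 0; i < writes; i++) {
                    buf.clear(); // rewind so the full buffer is written again
                    long start = System.nanoTime();
                    while (buf.hasRemaining()) {
                        ch.write(buf);
                    }
                    nanos[i] = System.nanoTime() - start;
                }
                return nanos;
            } finally {
                Files.deleteIfExists(file); // channel is already closed here
            }
        } catch (IOException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        long[] nanos = run(100_000, 128);
        long sum = 0;
        for (long n : nanos) {
            sum += n;
        }
        System.out.printf("mean write (ns): %.2f%n", (double) sum / nanos.length);
    }
}
```

The 128 byte record size in `main` is an arbitrary stand-in for a log line; plug in whatever matches your own messages.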

Variety is the spice of life

To demonstrate how one would use this little tool I took it for a ride:

- All numbers are in nanoseconds

- Tests were run pinned to CPUs; I checked the variation between running on the same core, across cores and across sockets

- This is RTT (round trip time), not one hop latency (which is RTT/2)

- The code prints out a histogram summary of pinging a 32b message. Mean is the average, 50% means 50% of round trips had a latency below X, and 99%/99.99% are in the same vein (percentiles are commonly used to measure latency SLAs).
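To make the summary format concrete, here is a small sketch of how such figures can be derived from raw round trip samples. It is an illustration only: it sorts the samples and uses the nearest-rank percentile definition rather than the histogram the utility itself uses, and `LatencySummary` and its methods are hypothetical names.

```java
import java.util.Arrays;
import java.util.Locale;

public class LatencySummary {
    // Nearest-rank percentile over an ascending array: the smallest sample
    // such that at least p percent of all samples are less than or equal to it.
    public static long percentile(long[] sorted, double p) {
        int rank = (int) Math.ceil(p * sorted.length / 100.0);
        rank = Math.max(1, Math.min(rank, sorted.length));
        return sorted[rank - 1];
    }

    public static double mean(long[] samples) {
        double sum = 0;
        for (long s : samples) {
            sum += s;
        }
        return sum / samples.length;
    }

    // Produces a line in the same layout as the results quoted in this post.
    public static String summarize(long[] rttNanos) {
        long[] sorted = rttNanos.clone();
        Arrays.sort(sorted);
        return String.format(Locale.ROOT, "mean=%.2f, 50%%=%d, 99%%=%d, 99.99%%=%d",
                mean(sorted), percentile(sorted, 50), percentile(sorted, 99),
                percentile(sorted, 99.99));
    }

    public static void main(String[] args) {
        long[] samples = new long[100];
        for (int i = 0; i < samples.length; i++) {
            samples[i] = (i + 1) * 1000L; // 1000, 2000, ..., 100000 ns
        }
        System.out.println(summarize(samples));
    }
}
```

Halving any of these figures gives a rough one hop estimate, per the RTT/2 note above.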

- Same core: mean=8644.23, 50%=9000, 99%=16000, 99.99%=24000

- Cross cores: mean=5809.40, 50%=6000, 99%=9000, 99.99%=23000

Sending and receiving data over loopback is CPU intensive, which is why putting the client and the server on the same core is not a good idea. I went on to run the same on a beefy test environment, which has 2 test machines with tons of power to spare, and a choice of NICs connecting them together directly. The test machine is a dual socket beast so I took the opportunity to run on loopback across sockets:

- Cross sockets: mean=12393.97, 50%=13000, 99%=16000, 99.99%=29000

- Same socket, same core: mean=11976.68, 50%=12000, 99%=16000, 99.99%=28000

- Same socket, cross core: mean=7663.82, 50%=8000, 99%=11000, 99.99%=23000

Testing the connectivity across the network between the 2 machines, I compared 2 different 10Gb cards and a 1Gb card available on that setup. I won't mention make and model as this is not a vendor shootout:

- 10Gb A: mean=19746.08, 50%=18000, 99%=26000, 99.99%=38000

- 10Gb B: mean=30099.29, 50%=30000, 99%=33000, 99.99%=44000

- 1Gb C: mean=83022.32, 50%=83000, 99%=87000, 99.99%=95000

The above variations in performance are probably familiar to those who do any amount of benchmarking, but may come as a slight shock to those who don't. This is exactly what people mean when they say your mileage may vary :). And this is without checking for further variation by JDK version/vendor, OS etc. There will be variation in the performance depending on all these factors, which is why a baseline figure taken from your own environment can provide a useful estimation tool for performance on the same hardware. The above also demonstrates the importance of process affinity when considering latency.

Conclusion

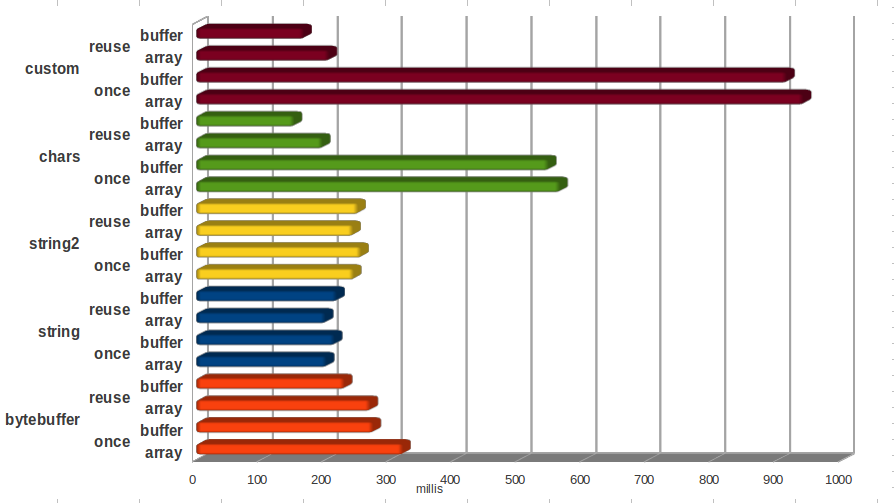

An average RTT latency of 20 microseconds between machines is pretty nice. You can do better by employing better hardware and drivers (kernel bypass), and you can make your outliers disappear by fine tuning JVM options and the OS. At its core Java networking is pretty darn quick, so make sure you squeeze all you can out of it. But to do that, you'll need a baseline figure to let you know when you can stop squeezing, and when there's room for improvement.

UPDATE (4/07/2014): I forgot to link this post to its next chapter, where we explore the relative performance of different flavours of the same benchmark using select()/selectNow()/blocking channels/memory mapped files as the ping transport, all nicely packaged for you to play with ;-).